The age of information is upon us, but with it comes growing concern about AI-generated content, especially deepfakes. These AI-generated images and videos can be disturbingly realistic, seamlessly replacing a person’s face in existing footage to create entirely fabricated scenarios. Deepfakes pose a significant threat because they can be weaponized to spread misinformation, damage reputations, and even disrupt elections. Now researchers have found help in an unexpected place: astronomy.

The Challenge of Deepfakes

Deepfakes leverage artificial intelligence to manipulate images and videos. They work by superimposing a target person’s face onto existing footage of another person. The results can be incredibly convincing, blurring the line between reality and fabrication. This ability to create such realistic forgeries is precisely what makes deepfakes so dangerous. Malicious actors can exploit this technology to spread disinformation, erode trust in institutions, and even influence elections by creating fake videos of political candidates making damaging statements.

For instance, imagine a deepfake video of a world leader appearing to declare war on another nation. The potential for panic and destabilization is immense. Authorities are keenly aware of this threat and are actively seeking ways to combat deepfakes.

Astronomical Techniques Can Spot Inconsistencies with Light

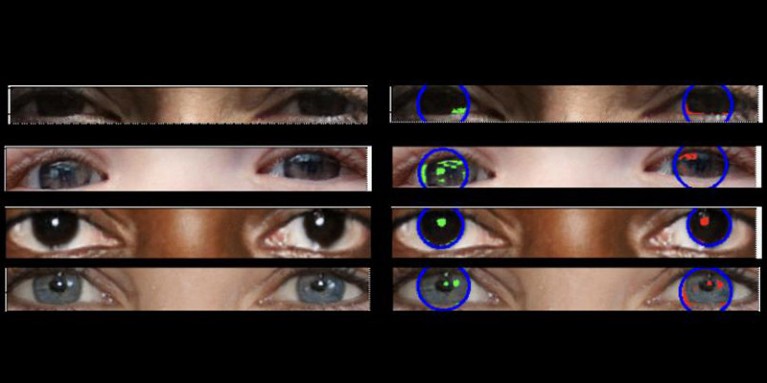

The solution may lie in a surprising field: astronomy. Researchers at the University of Hull, UK, have developed a technique that detects deepfakes by analyzing light reflections in the eyes. The key concept is the principle of “consistent physics.” In a genuine photograph, both eyes are lit by the same scene, so light reflects in them in a similar way, even though the two reflections won’t be perfectly identical. Deepfakes often fail to reproduce this consistency, leaving mismatched reflections between the two eyes.

These mismatches are subtle and hard to detect without sophisticated tools. This is where the researchers’ astronomical background comes into play: they borrowed techniques that astronomers use to analyze the light of galaxies and applied them to the reflections in the eyes. The research, led by Adejumoke Owolabi, uses two specific methods:

- CAS System: This method quantifies the concentration, asymmetry, and smoothness (also called clumpiness) of an object’s light distribution. Traditionally used by astronomers to characterize the shapes of galaxies, the CAS system can also flag inconsistencies in the way light reflects in deepfake eyes.

- Gini Index: This metric measures how unequally light is distributed among the pixels of an image. Borrowed from economics, where it measures income inequality, it is used by astronomers to describe how a galaxy’s light is spread across the pixels of its image. Here it finds a new application in detecting the uneven light patterns characteristic of deepfakes.
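To make the two measures more concrete, here is a minimal Python sketch. It assumes the eye reflection has already been cropped into a 2-D NumPy array of brightness values (the cropping step is not shown), and the function names `gini` and `asymmetry` are illustrative rather than taken from the study. `gini` follows the pixel-based Gini formula common in galaxy-morphology work. `asymmetry` is a simplified version of the CAS asymmetry term: it compares the image with its 180-degree rotation about the array center and omits the background correction and center search used in full CAS implementations. Concentration and smoothness are left out for brevity.

```python
import numpy as np


def gini(pixels: np.ndarray) -> float:
    """Gini coefficient of pixel brightness.

    0.0 means light is spread evenly over every pixel;
    1.0 means a single pixel holds all of the light.
    """
    # Sort absolute brightness values in ascending order.
    x = np.sort(np.abs(np.asarray(pixels, dtype=float).ravel()))
    n = x.size
    if n < 2 or x.sum() == 0:
        return 0.0  # Undefined for tiny or all-dark input; treat as perfectly even.
    i = np.arange(1, n + 1)  # 1-based rank of each sorted pixel
    return float(np.sum((2 * i - n - 1) * x) / (x.mean() * n * (n - 1)))


def asymmetry(image: np.ndarray) -> float:
    """Simplified CAS asymmetry: compare the image with its 180-degree rotation.

    0.0 means perfectly symmetric about the array center. No background
    correction or center search is applied (an assumption of this sketch).
    """
    img = np.asarray(image, dtype=float)
    total = np.abs(img).sum()
    if total == 0:
        return 0.0
    rotated = np.rot90(img, 2)  # rotate by 180 degrees
    return float(np.abs(img - rotated).sum() / total)


if __name__ == "__main__":
    even = np.ones((4, 4))
    spot = np.zeros((4, 4))
    spot[1, 1] = 1.0
    print(gini(even))       # 0.0: light spread evenly
    print(gini(spot))       # 1.0: all light in one pixel
    print(asymmetry(spot))  # 2.0: the spot has no mirror partner after rotation
```

Both functions return a single number per reflection, which is what makes it possible to compare the left and right eye directly.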

By comparing the light reflections in a person’s two eyes, the researchers achieved 70% accuracy in distinguishing real images from deepfakes. The Gini index proved to be a more reliable indicator than the CAS system.
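The comparison step can be pictured as a simple decision rule. This is an illustrative sketch under stated assumptions, not the study’s published procedure: it takes a brightness-distribution score (for example, a Gini coefficient) computed separately for the left and right eye reflections, labels the image a deepfake when the two scores differ by more than a threshold, and measures accuracy against known labels. The function names and the default `threshold=0.1` are hypothetical; a real detector would tune the threshold on labeled data.

```python
from typing import Sequence, Tuple


def classify(left_score: float, right_score: float, threshold: float = 0.1) -> str:
    """Label an image by how much its two eye-reflection scores disagree.

    The 0.1 default threshold is a hypothetical placeholder, not a value
    reported by the researchers.
    """
    mismatch = abs(left_score - right_score)
    return "deepfake" if mismatch > threshold else "real"


def accuracy(pairs: Sequence[Tuple[float, float]], labels: Sequence[str],
             threshold: float = 0.1) -> float:
    """Fraction of images whose predicted label matches the true label."""
    if len(pairs) != len(labels) or not pairs:
        raise ValueError("need one label per score pair, and at least one pair")
    correct = sum(classify(left, right, threshold) == label
                  for (left, right), label in zip(pairs, labels))
    return correct / len(pairs)


if __name__ == "__main__":
    # Made-up scores for illustration only; these are not data from the study.
    pairs = [(0.42, 0.44), (0.30, 0.55), (0.61, 0.58), (0.20, 0.47)]
    labels = ["real", "deepfake", "real", "real"]
    print(accuracy(pairs, labels))  # 0.75: the last real image is misflagged
```

Because the rule only compares the two eyes with each other, it needs no model of what a "correct" reflection looks like. A single fixed threshold, however, trades false positives against false negatives: raising it misses more fakes, lowering it flags more genuine photos.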

Limitations and the Road Ahead

Promising as it is, the technique has limitations. It can produce false positives (flagging real images as deepfakes) and false negatives (missing actual deepfakes). Additionally, as astronomer Brant Robertson points out, deepfake creators could adapt their models to evade this detection method by training them specifically to mimic realistic eye reflections.

However, the underlying approach holds significant merit. Researchers like Zhiwu Huang believe that analyzing subtle anomalies in lighting, shadows, and reflections across the entire image could complement existing deepfake detection methods and enhance overall accuracy. This research paves the way for further exploration of cross-disciplinary collaborations in the fight against deepfakes.

The battle against deepfakes is an ongoing one, requiring constant innovation and adaptation. This research by astronomers demonstrates the potential of looking beyond traditional boundaries to find solutions. By harnessing the power of seemingly unrelated fields, we can develop a more robust defense against the ever-evolving threat of deepfakes.