In the ever-evolving landscape of Artificial Intelligence, Google is taking a significant step to tackle disinformation by launching SynthID, a digital watermarking tool. Developed by Google DeepMind, the company’s AI division, SynthID aims to identify images created by AI, addressing the challenge of distinguishing AI-generated images from real ones.

The Battle Against Misinformation

In a world where AI is becoming increasingly adept at producing lifelike images, the potential for misinformation has surged. Google’s answer to this challenge is SynthID, which embeds subtle changes into individual pixels of an image. The resulting watermark is virtually invisible to the human eye but can be readily detected by software. The initiative aligns with Google’s commitment to responsible AI development and to fostering trust between creators and users.

The Imperceptible Yet Powerful Watermark

In the past, individuals and organizations used conventional watermarks, like logos or text, to signify ownership and discourage unauthorized use. However, these techniques prove inadequate for AI-generated images, because a visible mark can easily be edited out or cropped away. SynthID takes a different route: it is designed so that the watermark remains detectable after modifications like color changes, resizing, or applying filters. This robustness helps users and organizations identify AI-generated content more reliably.

How SynthID Works

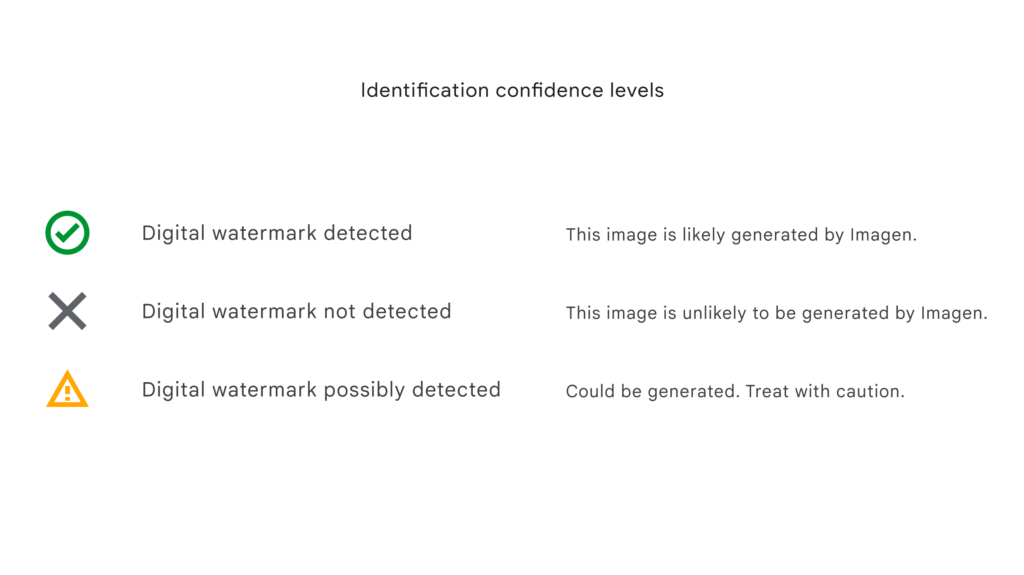

SynthID operates through a combination of watermarking and identification processes. AI-generated images produced by Google’s Imagen model receive an invisible watermark embedded within the image’s pixels. Upon scanning the image, SynthID can assess the likelihood of it being AI-generated based on the presence of the digital watermark. This innovative approach enhances the identification of AI-generated content while preserving image quality.
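The article does not describe SynthID's internal algorithm, so the following is only a toy illustration of the general idea of embedding an invisible mark in pixel values and later scoring an image for its presence. It swaps in a classical additive, spread-spectrum-style watermark, not Google's method. The function names, the secret `key`, the `strength` of 3 gray levels, and the detection `threshold` are all assumptions made for this sketch.

```python
import random


def make_pattern(key: int, n: int) -> list[int]:
    """Pseudo-random +1/-1 pattern derived from a secret key (hypothetical scheme)."""
    rng = random.Random(key)
    return [rng.choice((-1, 1)) for _ in range(n)]


def embed(pixels: list[float], key: int, strength: float = 3.0) -> list[float]:
    """Nudge each pixel up or down by `strength` gray levels, following the key's pattern."""
    pattern = make_pattern(key, len(pixels))
    # Clamp to the valid 0..255 range so the result is still a legal image.
    return [min(255.0, max(0.0, p + strength * s)) for p, s in zip(pixels, pattern)]


def detection_score(pixels: list[float], key: int) -> float:
    """Correlate mean-centered pixels with the key's pattern.

    The score is close to `strength` when the watermark is present
    and close to 0 when it is absent or the key is wrong.
    """
    pattern = make_pattern(key, len(pixels))
    mean = sum(pixels) / len(pixels)
    return sum((p - mean) * s for p, s in zip(pixels, pattern)) / len(pixels)


def looks_watermarked(pixels: list[float], key: int, threshold: float = 1.5) -> bool:
    """Turn the score into a yes/no answer using an assumed threshold."""
    return detection_score(pixels, key) > threshold


if __name__ == "__main__":
    # Stand-in for a grayscale image: 256 x 256 pixels flattened into one list.
    rng = random.Random(0)
    original = [rng.uniform(20, 235) for _ in range(256 * 256)]
    marked = embed(original, key=42)

    # A mild brightness and contrast change, as a stand-in for "color changes".
    adjusted = [0.9 * p + 10 for p in marked]

    for name, image in [("original", original), ("marked", marked), ("adjusted", adjusted)]:
        print(name, round(detection_score(image, key=42), 2), looks_watermarked(image, key=42))
```

Because the detector subtracts the image mean and averages over many pixels, a uniform brightness shift leaves the score unchanged and a mild contrast change only scales it. A simple scheme like this would not survive resizing or cropping, which is part of why production systems rely on more sophisticated techniques.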

A Step Towards Responsible AI Development

While SynthID isn’t entirely foolproof against extreme image manipulation, it represents a significant leap forward in pursuing responsible AI development. Its introduction reflects Google’s commitment to ensuring the safe use of AI and combating the potential misuse of AI-generated content. Google DeepMind and Google Research collaborated to fine-tune the technology, showcasing the company’s dedication to refining and advancing the tool.

Looking Ahead: The Future of SynthID

Google describes SynthID’s launch as “experimental,” signaling its intention to gather user feedback and improve the tool’s effectiveness. Google is not stopping at images, either; the technology’s potential extends to other media like audio, video, and text. This versatility underscores Google’s ambition to provide comprehensive solutions for identifying various forms of AI-generated content.

Towards Collaboration and Standardization

While Google’s initiative is commendable, experts argue that more coordination and standardization are needed across the AI industry. A patchwork of different methods for identifying AI-generated content can add complexity to an already intricate information ecosystem. Industry partnerships and initiatives, like the voluntary agreement Google joined in the US, can promote a more unified approach to combating misinformation and ensuring transparency.