Large language models (LLMs) are AI systems that power chatbots, generate text, and translate languages. A new study, however, points to a concerning trend: big tech companies are misleading users by labeling their LLMs as “open source” while withholding crucial information. This lack of transparency hinders scientific progress and raises concerns about bias and misuse.

“Open Weight” vs. Truly Open Source

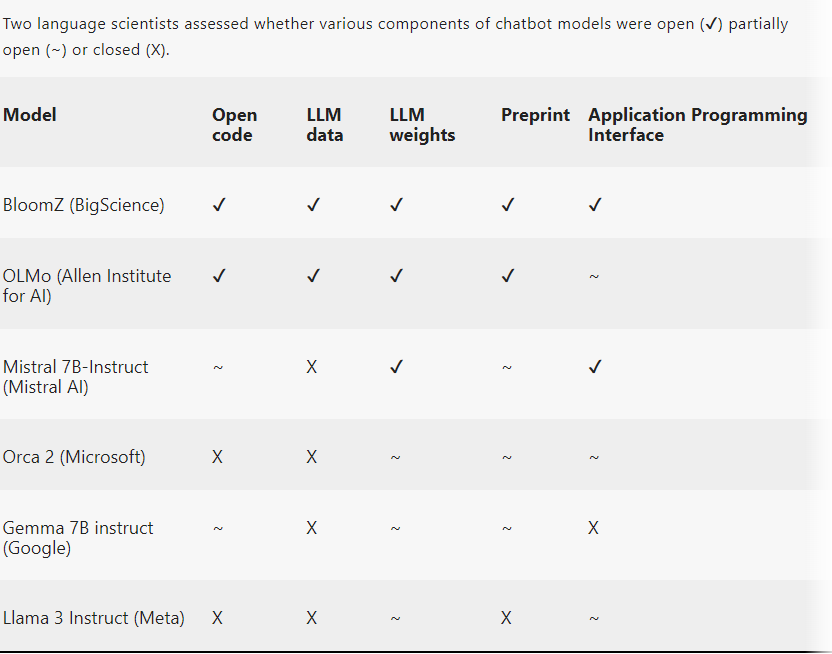

Researchers Mark Dingemanse and Andreas Liesenfeld analyzed 40 LLMs, and their findings expose a troubling practice. Many models, including those from Meta (Llama) and Google (Gemma), are classified as “open weight” but are not truly open source. This distinction is critical. “Open weight” simply means users can access the trained model weights, while the code and training data, the building blocks for understanding how the model works, remain hidden.

This lack of openness creates a significant roadblock for researchers. Imagine trying to improve a car without access to the engine or blueprints. Similarly, without access to the code and training data, researchers cannot effectively analyze, modify, or replicate LLMs. This stifles innovation and hinders scientific progress in the field of AI.

Open Washing: Misleading Claims

The study highlights a concerning tactic by big tech companies: “open washing.” Companies like Meta and Google are accused of using the term “open source” loosely to gain a reputation for transparency without actually delivering on it. This approach allows them to reap the PR benefits of appearing open while maintaining control over their models.

Dingemanse emphasizes the importance of clear terminology. “Existing open-source concepts can’t always be directly applied to AI systems,” he acknowledges. However, the lack of transparency goes beyond mere terminology. The study also criticizes companies for relying on blog posts with cherry-picked examples or corporate preprints lacking detail instead of rigorous, peer-reviewed scientific papers. This practice raises questions about the true motivations behind these LLM releases.

BLOOM is Truly Open Source

The analysis is not all doom and gloom. The study identifies BLOOM, an LLM developed through an international academic collaboration, as a shining example of true open-source practice. BLOOM provides full access to its code, training data, and documentation, allowing researchers to scrutinize, modify, and build upon the model. This level of openness fosters collaboration and innovation, accelerating advancements in LLM development.

The EU’s AI Act

The European Union’s recently passed AI Act is poised to significantly affect how “open source” is defined for LLMs. The act exempts open-source models from extensive transparency requirements, so a great deal depends on what counts as “open source” in this context. That definition is likely to be contentious, particularly regarding access to training data. Companies may lobby for a definition that allows them to maintain control over their data, potentially hindering true openness.

Why Being Truly “Open Source” Matters

Transparency is important for building trust in LLMs. Without understanding how these models work and the data they are trained on, it is impossible to assess potential biases or misuse. For example, an LLM trained on biased data may perpetuate discriminatory outputs. Open access to code and data allows researchers to identify and address such issues, ensuring responsible development of AI.

Openness fuels scientific progress. By allowing researchers to tinker with and improve upon existing models, the field of AI can advance more rapidly. Open-source LLMs like BLOOM demonstrate the power of collaboration and knowledge sharing in driving innovation.

Redefining Openness and Prioritizing Science

The future of LLM development hinges on a clear definition of “open source” that prioritizes transparency and scientific rigor. The EU’s AI Act presents an opportunity to establish a framework that encourages responsible development and discourages misleading practices. Additionally, a renewed emphasis on peer-reviewed scientific papers is crucial to ensure the quality and reliability of LLM research.