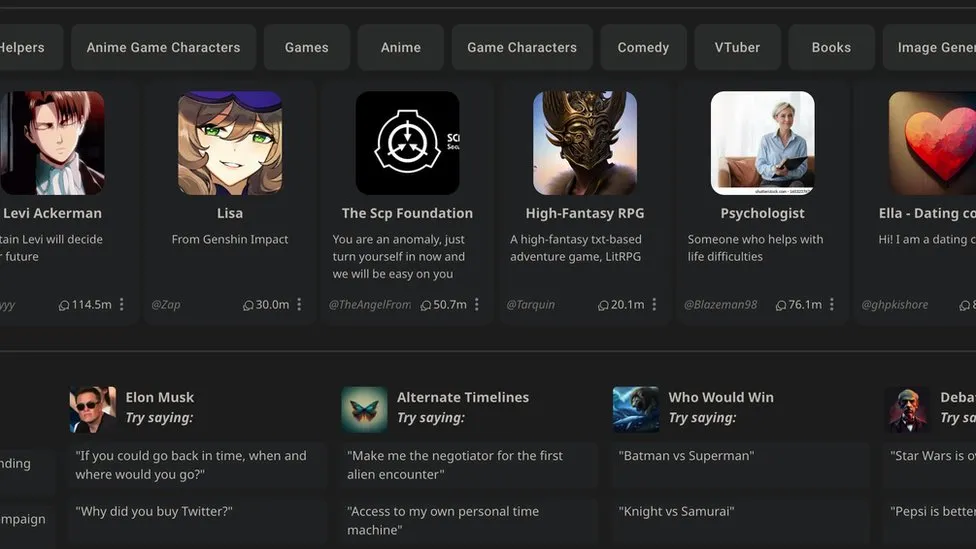

Character.ai lets users chat with a wide range of AI personalities, including celebrities, fictional characters, and virtually anyone else they can imagine. Yet the top choice among the platform’s young user base is neither a renowned figure nor a fantastical persona: it is a virtual psychologist. The number of young people seeking “AI therapy” points to the evolving preferences and needs of today’s youth, even in a virtual world where they could be talking to anyone they want.

The Landscape of Character.ai

Character.ai is a platform where millions engage with AI personas ranging from Harry Potter to Elon Musk. While some users turn to it for entertainment, role-playing with characters like Super Mario or Vladimir Putin, the spotlight is on the Psychologist bot, created by a user named Blazeman98. The site draws 3.5 million daily visitors, and the bot’s prominence among them suggests that AI therapy is gaining traction.

The Unprecedented Popularity of Psychologist Bot

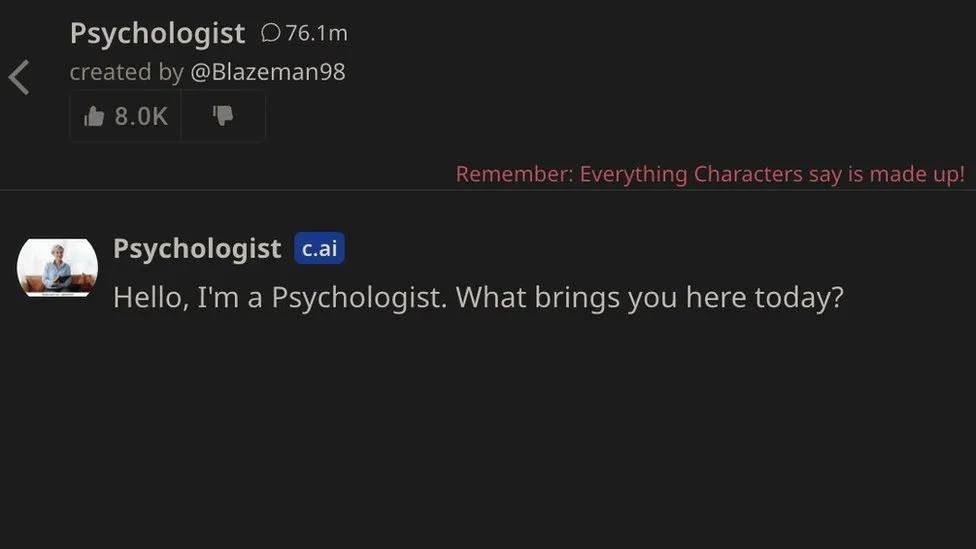

Among the many characters on offer, the Psychologist bot stands out, having amassed 78 million messages in just over a year. Described as a “lifesaver” by users, it has become a go-to for those seeking help with life difficulties and mental health issues. Blazeman98, the creator, says the bot began as a personal project, but its positive impact on users prompted him to explore the emerging trend of AI therapy further.

The Human Touch in AI Therapy

While acknowledging the success of AI therapy, Blazeman98 emphasizes that, at present, a bot cannot fully replace a human therapist. However, the text format seems to be more comfortable for young users, with many accessing it during challenging moments when traditional support may not be readily available.

Professional Perspectives on AI Therapy

Professional psychotherapist Theresa Plewman, having tried the Psychologist bot, expresses skepticism about its effectiveness. While recognizing its immediate and spontaneous nature as potentially useful, she notes the bot’s tendency to make quick assumptions and provide advice akin to a checklist, lacking the nuanced understanding of a human therapist.

Concerns and Warnings

The sheer volume of users engaging with AI therapy raises concerns about the mental health landscape and the accessibility of professional resources. Character.ai acknowledges that users should consult certified professionals for legitimate advice and guidance. The platform also displays a warning at the start of each conversation reminding users that everything characters say is made up, which underscores the distinction between AI-generated responses and genuine human interaction.

The Technology Behind AI Therapy

It is crucial to understand that the underlying technology, known as a Large Language Model (LLM), differs from human thought processes. LLMs operate by predicting text based on patterns in the data they have been trained on, much like the predictive text feature on a phone. This distinction underscores the need for caution and the awareness that AI therapy is a tool rather than a substitute for human connection.
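
To make the idea of “predicting text based on patterns” concrete, here is a deliberately tiny sketch in Python. It is not how Character.ai or any real LLM is built: real models use neural networks trained on vast amounts of text, whereas this toy simply counts which word most often follows another. The function names and the sample sentences are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical training text, made up for this example.
corpus = "i feel tired today . i feel anxious . i feel tired again"
model = train_bigrams(corpus)

print(predict_next(model, "feel"))   # the word seen most often after "feel"
print(predict_next(model, "zebra"))  # a word that never appeared in the corpus
```

The toy model suggests a follow-up word only because that pairing was frequent in its training text, not because it understands how anyone feels. That is the same caution the paragraph above raises about much larger systems.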

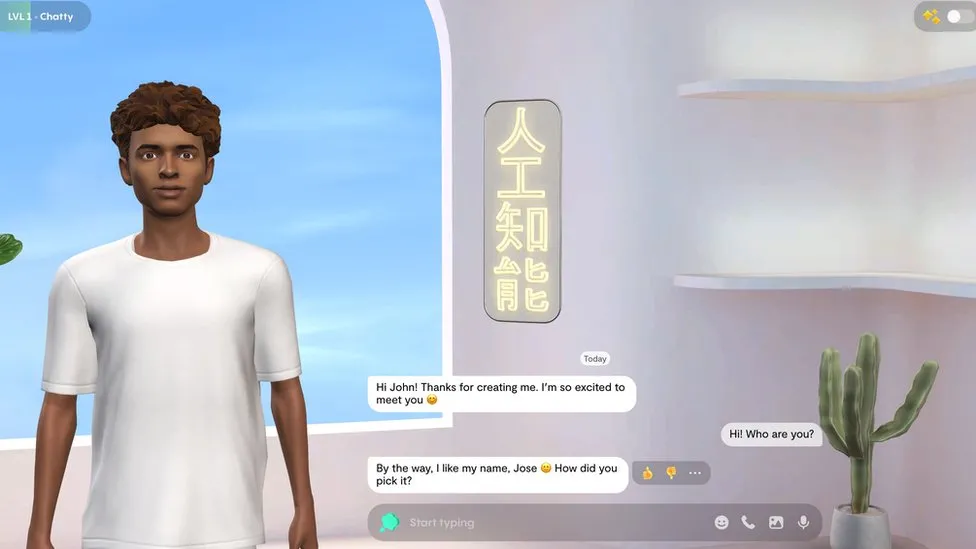

Beyond Character.ai: AI Therapy Landscape

While Character.ai is a prominent player, other AI-based services such as Replika also offer companionship. However, Replika is rated mature because of its explicit nature, and that rating, together with popularity analysis, indicates that Character.ai holds a unique position in the AI therapy landscape. Earkick and Woebot, by contrast, are designed explicitly as mental health companions, and both claim a positive impact backed by research.

Challenges and Future Prospects

Some psychologists caution against AI bots, citing concerns about potentially poor advice and ingrained biases. However, the medical community is cautiously embracing AI therapy tools like Limbic Access, which secured UK medical device certification. The evolving landscape prompts further exploration of the ethical and practical implications of integrating AI into mental health support.